The DevOps that I used to know

I remember a past sysadmin life, just before the term DevOps became widespread, when a new project would always begin with a conversation among three or more parties. There would be at least one developer, a project manager, a sysadmin (hello!), the DBAs, and sometimes even a network admin. It was not unusual to also have some of the business analysts in that first talk. We would lay out the purpose of the project, all the constraints, the fixed and moving parts, the scope, et cetera.

I would usually have an immediate follow-up call or meeting with the developer to go over finer-grained details, including specifics about middleware, configuration, file templates, naming conventions and so on.

It was not strange to have a working development environment no later than half an hour after the second conversation, with all the parts created:

- Computer hosts for as many environments as needed

- External data sections co-owned with the developer

- A configuration management policy for the application

- The documentation pages for the project in the internal wiki

- All access defined by different group levels

- The system and application monitoring

The developer was given a filesystem location to drop any packages created, whose names had already been agreed and were predictable. They were given access, via version control tools, to a repository where they could modify configuration file templates and the external data that filled in those templates. For static content, there was also a file server that followed the same structure and naming conventions.
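To make the idea of templates filled in by external data concrete, here is a minimal sketch. It is not the tooling we used back then; the template contents, the host names and the `render_config` function are all hypothetical, chosen only to show one template being reused across environments.

```python
from string import Template

# Hypothetical configuration template, as it might live in the shared repository.
CONFIG_TEMPLATE = Template(
    "app.name=$app_name\n"
    "db.url=jdbc:postgresql://$db_host:$db_port/$db_name\n"
    "log.level=$log_level\n"
)

# Hypothetical external data: one set of values per environment.
EXTERNAL_DATA = {
    "development": {
        "app_name": "orders",
        "db_host": "db-dev.example.internal",
        "db_port": "5432",
        "db_name": "orders",
        "log_level": "DEBUG",
    },
    "production": {
        "app_name": "orders",
        "db_host": "db-prod.example.internal",
        "db_port": "5432",
        "db_name": "orders",
        "log_level": "WARN",
    },
}


def render_config(environment: str) -> str:
    """Fill the shared template with the values for one environment."""
    # substitute() raises KeyError when a value is missing, so an incomplete
    # data set fails loudly instead of producing a broken file.
    return CONFIG_TEMPLATE.substitute(EXTERNAL_DATA[environment])


if __name__ == "__main__":
    print(render_config("development"))
```

The template is identical everywhere and only the data differs, which is why a wrong value was usually the first and only place we had to look.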

Within the first two days, given this setup, the developer could focus on their job straight away. Typically, they would produce a first release within days and start the test cycle very early. In most cases, there was a release to production within the first two weeks.

Whenever anything was not going well in production, we all knew it could hardly be an environmental issue because, yes, it worked well in development. We could have that confidence because the environments were all the same: the same infrastructure, the same OS, the same application container, packaged the same way, using the same configuration templates. Errors were almost always found in incorrect configuration values, which were easy to fix given that everything important was defined as external data and each provider to the application's operative environment was defined in a formal way. We could trust the system.

In that life, as a sysadmin, I got to know a lot about what the applications were doing, the developers got to know a great deal about how the operative environment worked, and we all shared the same documentation space: a documentation space with rules that defined how the articles should look, rules for access and a strict per-team organization.

I have never again seen QA people being so productive and happy. Imagine: they could test performance by simply dropping a new package version in a directory, changing values via version control and waiting one minute for those changes to take effect.

The step to bring all this to production was very simple: it was actually the very same process. There was one tiny difference: Operations, who owned the production environment, held the key for changes. Everything was in version control, so for production the versions were pinned to specific releases instead of using the latest, and a successful series of tests in the QA and performance environments was a requirement. Almost nothing was left to chance, yet it was extremely agile. The controls existed where they made business sense, and the rest was as formal as possible in order to enable maximum automation. Very soon we were more focused on speed than on anything else. In this setup the feedback loops worked extremely well, and soon everyone wanted this, but even faster.
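The difference between production and every other environment can be sketched in a few lines. This is only an illustration under assumed names: `resolve_version`, the `pins` mapping and the dotted version scheme are hypothetical, not the actual mechanism we had in place.

```python
def version_key(version: str) -> tuple:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def resolve_version(environment: str, available: list, pins: dict) -> str:
    """Pick the package version to deploy in a given environment.

    Environments listed in `pins` get exactly the pinned version;
    every other environment follows the latest available one.
    """
    if environment in pins:
        pinned = pins[environment]
        if pinned not in available:
            raise ValueError(f"pinned version {pinned} has not been published")
        return pinned
    return max(available, key=version_key)


if __name__ == "__main__":
    published = ["1.2.0", "1.10.0", "1.9.3"]
    approved = {"production": "1.9.3"}  # set by Operations after QA sign-off
    for env in ("development", "qa", "production"):
        print(env, resolve_version(env, published, approved))
```

Under this sketch, changing what runs in production is a single, reviewable change to the pin, while development and QA keep following the newest package.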

Of course, it was not all perfect. This way of working required one thing: formality. And this meant that creating something radically different would take time at the beginning, as every aspect of the paradigm had to be defined before using it. Say, for example, that instead of Java, some development team decided that the new application had to be coded in Ruby. Of course we would have no ready-to-use model for hosting Ruby applications, so the story I just told you would not apply. Sometimes parts of the infrastructure stack would not be ready for the hosted application: some libraries would be too old, locations might differ, and allegedly too many adjustments would be necessary. The developers might also decide that they did not need to create packages, and that the configuration would be shipped in a different way. All valid reasons for their application.

Enter the container. The developers use a very different environment for their coding work, often a different operating system. Instead of having to know (or, as some of them put it, care) about the operative environment where their application will live, they can now create a fishbowl with the middleware, the libraries, all the dependencies, the configuration files and (cough, even databases, cough) inside. Then they drop it into a repository of images, ready to be installed on a bigger computer that may have a very different setup; as long as it runs the correct software to host and run that fishbowl image, it will work.

Basically, a development team with a Docker Engine could get there, that is, have their application deployed, sooner than by following a formal process. This is definitely disruptive, and it gets things done.

Now, I can hear you thinking: "For sure this is a good thing, what is he complaining about?"

Yes, it is. At least in some cases, in certain circumstances, and as a quick way to get something released, it is. I would actually argue that it is a great way to automate unit testing. However, I see a number of problems with our current paradigm, and the number one is that containers are a return to the silos: they do not enable feedback loops, and they become black boxes where immutability is preferred over introspection. Does the container become a standard package that can be deployed to any of a number of machines running certain OSs? Yes, it does… but how many organizations do you know that run different OSs? As far as I know, we already have standard packages, and for when you need a different one, tools like FPM come in very handy. Any tooling you had for the operative environment is no longer enough; you need a new ecosystem to inspect, monitor, manage and secure those containers. We are back to square one.

There are some undeniable advantages to using containers over VMs; the two biggest ones are speed and performance, and arguably also platform independence. Yes, there have been lots of articles demonizing them for the wrong reasons. But I think most detractors get too fixated on attacking a certain container type without realizing what actually happened for such a gap to form in the first place. The container is the revenge of the developer for decades of sysadmin tyranny. But confining this to containers would be a blatant mistake; containers are not the actual issue. They are just the latest iteration of the process of turning computing into a commodity. The buzzword immediately before containers and Docker was the Cloud. Somewhere in the middle there were BigData, ChatOps, and a few more. Distributed Systems and MicroServices are also on the rise. The thing is that almost none of them are new concepts, and some of them are indeed very old ones.

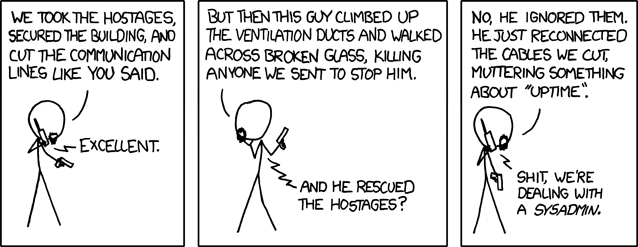

But let's not lose our bearings here: there is always at least one machine running at least one application, attached to some storage, and offering one or more services on the network it is connected to. Full stop. It has always been like this, and it will always be like this: a server and a consumer exchanging information over a medium. No matter how many acronyms and buzzwords you throw in, everything is a manifestation of that model. And no, DevOps is not about the Cloud, not about Docker, not about the tools. DevOps is not a product, or a job title. Here is what we are getting wrong: DevOps is about people interacting, about feedback loops and about working together towards a more agile solution for a given set of requirements. If you really think that you can buy DevOps, or that acquiring tools, using containers or subscribing to AWS will make your organization DevOpsy, you did not get it.

Now, let me extend that idea and roll back time, just for a moment.

In the early days of computing, developers and sysadmins would be the very same person or, at the least, be in the same team. The system was conceived as a platform, the whole operative environment in which the application exists. Anybody involved in running such a system would have a high degree of understanding of how the different parts worked together. Sure, some would be more knowledgeable than others about certain parts, but the technical teams were all geared towards a common goal. Later on, with UNIX systems proliferating and becoming ubiquitous, the role of the sysadmin appears. Progressively, it gets detached from anything to do with the applications running on the system. More and more, the sysadmin becomes a gatekeeper, almost a guardian, more worried about uptime and system performance than anything else. In the meantime, the developers have also taken advantage of the new situation; the code they write is more and more abstracted from the system, the database, and the network. It is no longer necessary for the developers to know about the operating system, or for the sysadmin to know about the application. Now it is a world of opposites. My uptime versus your features.

I have had the opportunity to work in several sysadmin teams through my life, with a wide range of expertise, in very different environments and for different industries. And yes, the stereotypical nerdy sysadmin, extremely talented but also extremely unsociable, exists.

And, as in many things in life, numbers matter. In any given organization, for each sysadmin you can count at least 10 developers, in various functions. And of course, these guys know about operating systems too! They know how to install Linux, get their libraries and development tools, code and release software. And well, servers nowadays are like big PCs, right? What is more, they are just computing units! You go to AWS or any off-the-shelf service and you get computing units on tap, a shell with root access and an IP address. But wait, it gets even better: with containers, you do not even need to bother knowing how to package your application for several platforms. You just do it once and that is it; it will run anywhere the container can. You do not need the sysadmin anymore!

Or do you?

Here is the thing: your container will be running on a host, probably bare metal. Your EC2 instance is a virtual machine, running on a hypervisor, which ultimately also runs on bare metal. Your hosts see each other because there is a properly configured network, and while most probably all of that is described as code, you need a real network behind it, just as you need real storage, real operating systems and real computers. In the end, there is always a physical data center, with physical computers, storage and network that someone needs to manage.

Now, of course, all these cloud computing solutions that give you instant machines, containers or even ways to host a function are using a DevOps approach to run their services, with tons of automation and solid design behind them. But here is the disconnect: they are another company, another organization, with other teams. You will not be able to negotiate how the VLAN tagging is implemented or what optimizations are there in the storage. Will you be able to have reverse DNS records for your machines? Is the operating system tuned for your services, or to optimize their return on what you pay them?

Ultimately, should you care? That is the big question. Does it matter?

I also had a fun situation years ago, when VMs hosting a Java application would, after a certain amount of time, start running out of memory. The first idea from the developer was to add more memory to the VM. That solved the problem. A little later on, the problem appeared again, so we increased the memory. At this point we had gone from 2 GB to 8 GB, for an application whose data set, once started, was under 200 MB. And, yes, eventually, a little later this time, the out-of-memory error hit again. Of course, from the second occurrence the other sysadmins and I suspected that the issue was not a lack of memory, but how the memory was being handled by the application. Despite bringing this to the table, backed up with evidence, we kept adding memory to those machines, as if memory were infinite.

When we reached the point where we were asked to add 32 GB of RAM to the VMs, I had to bring it to management. And here I had my epiphany. Management was very understanding: if they request more memory, give them more memory. "But it is clearly a memory leak!" I added, completely perplexed by the answer. "Of course it is. But adding more memory is cheaper than having a developer fix the leak."

And so it was. Often throwing money at a problem, that is, adding more of resource X, is perceived as an OK solution. So we kept doubling the memory until it took months for the application to run out of memory, and then we would be asked to reboot. We started monitoring the memory consumption of those systems and arranging controlled downtime to restart them before they went down by themselves. Beautiful! Today this would be even better: you have a number of instances running your application, and if one misbehaves, another gets launched, so it does not matter if your code leaks. As long as it does what it is supposed to do, it does not matter how.
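The arithmetic behind those controlled restarts is trivial, which is part of the point. The sketch below is not the monitoring we actually ran; the function name `hours_until_exhaustion`, the sample format and the figures are assumptions made purely for illustration, and it naively assumes the leak grows linearly between the first and last sample.

```python
from typing import List, Optional, Tuple


def hours_until_exhaustion(
    samples: List[Tuple[float, float]], limit_mb: float
) -> Optional[float]:
    """Estimate how many hours remain before memory usage reaches limit_mb.

    `samples` is a time-ordered list of (hours_since_start, used_mb) pairs.
    Returns None when usage is flat or shrinking, i.e. no leak is visible.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (t_first, mb_first), (t_last, mb_last) = samples[0], samples[-1]
    if t_last <= t_first:
        raise ValueError("samples must be ordered by increasing time")
    growth_per_hour = (mb_last - mb_first) / (t_last - t_first)
    if growth_per_hour <= 0:
        return None
    # Never report a negative time if the limit has already been crossed.
    return max(0.0, (limit_mb - mb_last) / growth_per_hour)


if __name__ == "__main__":
    # Hypothetical readings: the application grows by about 50 MB per hour.
    readings = [(0.0, 1000.0), (10.0, 1500.0), (20.0, 2000.0)]
    print(hours_until_exhaustion(readings, limit_mb=8000.0))
```

Knowing when the machine will fall over lets you schedule the restart. It does nothing to fix the leak.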

And here is where I decided to draw the line. Would we accept flying in a plane that leaks fuel, as long as it flies? Would we accept a meal where part of the food is missing, as long as you get fed?

At what point did we give up on quality in the IT industry? The commoditization of hardware and operating systems has definitely played a role in this strange transformation. Two decades ago a memory leak in an application would not have been acceptable: downtime was too costly and the impact on customers could have bad consequences for management, hence the sysadmins' focus on system uptime, stability and controls. Today, in contrast, we are much more accepting of service downtime in most industries, as systems tend to be much more distributed and Load Balancing has finally overtaken High Availability, again giving up on any improvements at the operating system level and focusing on the application layer.

The sysadmin is now an operator

So all of these, the arrival and quick degradation of DevOps, the operative environment as a commodity, the containers, the focus on features versus quality, and so on, are driven by the developer escaping from the sysadmin. And in my opinion, the silo, the wall, the divide, is back again. Only now the sysadmin is in the cloud, and is no longer a person who can bring you misery. It is only a bot that fires up more hosts in a data center to adjust capacity, and does not care if your code leaks memory.

So, with the sysadmin out of the picture, up there in the cloud, there is no more DevOps as it used to be. Only developers remain, now doing the only part of DevOps that survives: Continuous Integration, Continuous Deployment and automated testing. There is no longer any intention to fix or tune the machine; when it is a bit old, you spin up a new one, maybe with new code that hopefully will run better. Configuration management is being relegated to a mere provisioning step, while the immutable configuration paradigm is on the rise.

Most sysadmins reading this are most probably in denial and will invoke the magic word: legacy. Sure, you can cling on there for as long as you can. As a matter of fact, there are still people doing COBOL, and companies still relying on mainframes. Maybe you think it is nice that old systems survive. I think it is dramatic evidence of an industry that does not know how to evolve in a normal way and depends on systems that work, without understanding how.

For the others who have been paying attention, you probably know this already, but here it goes: DevOps does not care about sysadmins anymore. You are not sitting at the table with the cool kids. You are just managing whatever PaaS your boss bought, or keeping the Docker Engines up and running. How are we going to transform the sysadmin role into something else? Maybe we could start by giving ourselves a new title, like Google did with the SREs.

If you have read this far, you have already guessed that I do not much like the way things are turning out in our industry, and yes, I have some ideas about how it could be done better. At the end of the day it is a fight between doing what is right and doing what is convenient. I have talked a lot about how some things have gone off the rails, and have not proposed any solutions or alternatives. What is the way to go from here?

That is a retort for another time.

DISCLAIMER: We use data centers as a resource, and cloud computing and containers when appropriate. We do not prefer one tool over another for what it is, but for what it can do and for how it shapes culture in our organization.